Optimising and Scaling your Confluent Cluster

Author: Oscar Moores

Release Date: 04/08/2025

Confluent is more than a single piece of software. Founded by the minds behind Apache Kafka, it offers an array of interlinked physical and logical components: brokers, topics, partitions, ksqlDB servers, Kafka Streams applications, producers and consumers (to name just a few), all working together to form an incredibly versatile data streaming platform.

In this blog, we’ll dive into two of those components, the producer and the consumer, focusing on how they can be optimised and scaled to get data into and out of a cluster. While doing so, it’s important to recognise that Confluent is a system with many moving parts, and the reality of Confluent and Kafka is that all of the components are linked. Thinking about getting data onto a cluster as something only the producer has a hand in is akin to planning a party and only thinking about what cars everyone will drive to get there: when people arrive, they will find there is not enough parking.

Optimising or scaling means finding what is bottlenecking the flow of data, then changing settings or adding components to increase throughput so that you can get more data into or out of your cluster more quickly. This will often mean configuring broker or topic settings (sometimes even adding a few partitions).

Increasing Throughput: Configuring Producers

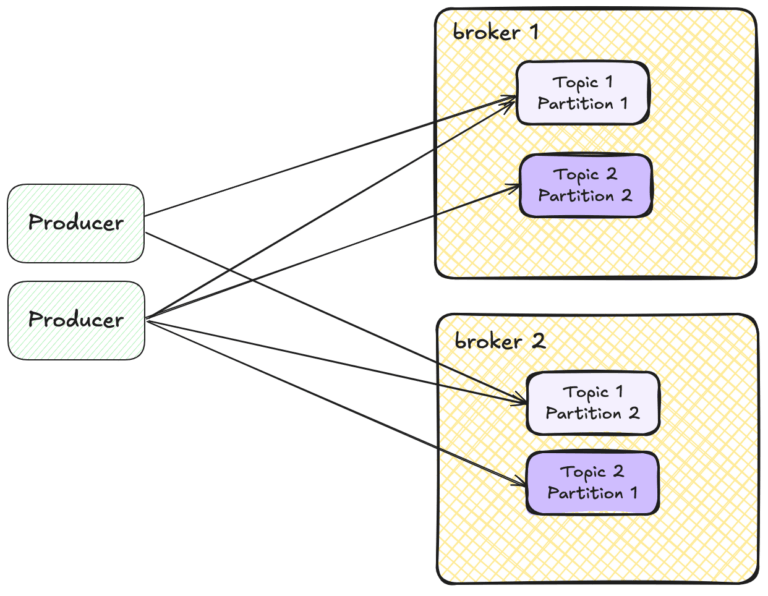

Producers are how you get data into a cluster. A producer picks a topic to send data to, serializes the data and decides which partition each record is sent to. The topic's records are stored in partitions, which are distributed across the brokers in the cluster to allow scaling and to provide resilience should a broker have a problem.

Producers are easier to scale than consumers: you can just spin up a new producer and point it at the topic you want data sent to. This is not always necessary, though, as careful configuration may increase an existing producer's throughput enough to avoid starting a new one.

A producer can become a bottleneck in a number of ways: its CPU could be at capacity, it might not have enough memory, or it could be limited by how fast it can read from disk. When this occurs it may be necessary to start more producers, but optimising the producer's code or improving its hardware is often an option too.

Producer Settings

Optimising a producer doesn’t always mean changing its code or hardware. Many producer settings can be tweaked to change its performance, although a lot of these changes involve a tradeoff that might not be acceptable if it conflicts with business needs.

We’re going to only cover a small selection of the more important settings, but more can be found in the producer configuration reference:

https://docs.confluent.io/platform/current/installation/configuration/producer-configs.html

ACKS and Exactly Once (EO) Delivery

One of the biggest ways to slow down a producer is to use an exactly-once delivery guarantee, which is enabled by setting ‘acks’ to all, ‘max.in.flight.requests.per.connection’ to 5 or lower and ‘enable.idempotence’ to true, and by using transactions (which requires a ‘transactional.id’).

Disabling exactly-once delivery and relaxing ‘acks’ (to 1 or 0) can massively speed up production of messages by dropping the requirement to check sequence numbers, wait for other brokers to confirm they have replicated the record, and handle transactions. The price is weaker guarantees: messages can be duplicated or lost. Of course, relaxing these settings is not always possible, as sometimes you need exactly-once delivery or atomic delivery of a set of messages, but it is worth reviewing what they are set to if those features are not required.
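As a rough illustration of the two ends of this tradeoff, here are two producer configurations written as plain Python dicts. The keys are real producer property names, but the values, the transactional id and the supports_exactly_once helper are assumptions made up for this sketch, not recommended settings.

```python
# Illustrative producer settings; values are example choices only.

# Exactly-once style producer: idempotent, fully acknowledged, transactional.
exactly_once_producer = {
    "acks": "all",
    "enable.idempotence": True,
    "max.in.flight.requests.per.connection": 5,  # must be 5 or lower
    "transactional.id": "orders-producer-1",     # hypothetical id
}

# Throughput-first producer: only the partition leader acknowledges,
# with no idempotence and no transactions. Duplicates or loss become possible.
throughput_first_producer = {
    "acks": "1",
    "enable.idempotence": False,
}


def supports_exactly_once(config: dict) -> bool:
    """Hypothetical helper: check the conditions for exactly-once delivery."""
    return (
        str(config.get("acks")) in ("all", "-1")
        and config.get("enable.idempotence") is True
        and int(config.get("max.in.flight.requests.per.connection", 5)) <= 5
        and bool(config.get("transactional.id"))
    )


print(supports_exactly_once(exactly_once_producer))
print(supports_exactly_once(throughput_first_producer))
```

A check like this could sit in a deployment pipeline to catch a producer that is supposed to be exactly-once but has had one of its settings loosened.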

Latency for Throughput: Considerations of the Tradeoff

In Kafka and Confluent there is often a tradeoff between latency and throughput: many settings increase the latency of a producer (this also applies to consumers) in exchange for higher throughput.

Two closely linked settings of this kind are ‘batch.size’, which sets the upper limit in bytes for a batch of messages bound for a single partition, and ‘linger.ms’, which sets how long a producer will wait for a batch to fill before sending it anyway.

Increasing these settings means more messages are sent in each batch, which increases throughput by reducing the overhead of sending a high volume of small batches. The cost is that messages spend longer waiting to be batched, which translates to higher latency.
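To put rough numbers on the batching effect, the sketch below counts how many batches are needed to send the same set of messages at two different ‘batch.size’ values. It is a back-of-the-envelope model that assumes fixed-size messages, a single partition and batches that always fill; it ignores compression and record overhead, and the batches_needed function and the example values are hypothetical.

```python
import math


def batches_needed(message_count: int, message_bytes: int, batch_size_bytes: int) -> int:
    """Estimate the batches required, assuming every batch is filled."""
    messages_per_batch = max(1, batch_size_bytes // message_bytes)
    return math.ceil(message_count / messages_per_batch)


# 100,000 messages of 1 KiB each, bound for one partition.
default_batches = batches_needed(100_000, 1024, 16_384)   # 16 KiB batch.size
larger_batches = batches_needed(100_000, 1024, 131_072)   # 128 KiB batch.size

print(default_batches)  # 6250
print(larger_batches)   # 782

# Example settings that favour throughput over latency (values are illustrative).
throughput_settings = {"batch.size": 131_072, "linger.ms": 20}
```

Batches only fill like this if messages arrive quickly enough or ‘linger.ms’ gives them time to accumulate, which is where the added latency comes from.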

The Impact of Brokers on the Production and Consumption of Messages

There are many ways a broker can be the bottleneck when producing and consuming records. The most obvious is that the broker's hardware can't handle the load it's under: its CPU is overloaded, there isn't enough memory or it can’t perform I/O operations quickly enough. In this case it is possible to upgrade the hardware, but the distributed nature of Confluent also makes it easy to add more brokers and use the kafka-reassign-partitions tool to move some partitions to them, spreading the load over the new hardware.
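The kafka-reassign-partitions tool takes its instructions as a JSON file listing which brokers should hold the replicas of each partition. As a sketch, the snippet below builds such a file for a hypothetical topic called orders, spreading six partitions over four brokers round-robin. The topic name, broker ids and the build_reassignment helper are assumptions for illustration, and in practice the tool can also propose a plan for you.

```python
import json


def build_reassignment(topic, partition_count, broker_ids, replication_factor):
    """Spread each partition's replicas round-robin over the given brokers."""
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor cannot exceed the number of brokers")
    partitions = []
    for partition in range(partition_count):
        # Start each partition on a different broker so leaders are spread out.
        replicas = [
            broker_ids[(partition + offset) % len(broker_ids)]
            for offset in range(replication_factor)
        ]
        partitions.append({"topic": topic, "partition": partition, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


# Broker 4 is the hypothetical newly added broker.
plan = build_reassignment("orders", 6, [1, 2, 3, 4], 3)
print(json.dumps(plan, indent=2))
```

Moving partitions copies data between brokers, so a plan like this is best applied when the cluster has spare capacity.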

Request Handler Threads

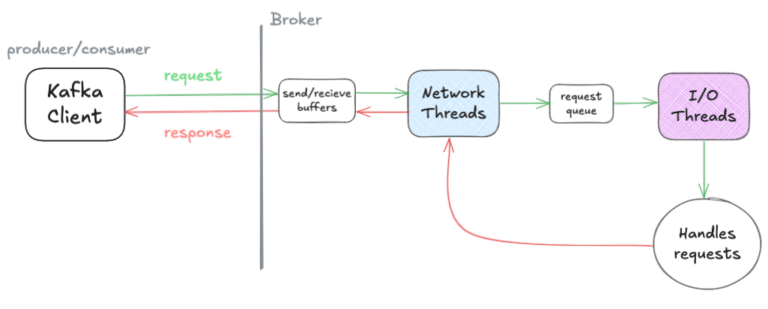

In the broker there are two types of threads that manage and handle requests from consumers and producers: the network threads and the I/O threads.

Requests from producers and consumers are sent to the broker and picked up by the network threads, which queue each request so that the I/O threads can carry it out.

It is possible for the network and I/O threads to be overworked without the broker's CPU being at capacity, slowing down how quickly records can be produced to and consumed from the broker.

The load on the threads can be monitored using metrics.

If the metric ‘NetworkProcessorAvgIdlePercent’ is low, it is an indicator that the load on a broker's network threads is high and could be a bottleneck for performance. To enable more network threads, increase the value of the ‘num.network.threads’ setting.

The I/O threads can be monitored in a similar way with the ‘RequestHandlerAvgIdlePercent’ metric, and a further pair of metrics gives an idea of how well they are coping: ‘RequestQueueSize’ and ‘RequestQueueTimeMs’. These monitor the queue of requests that the I/O threads take from: its size and how long requests wait in it. If these metrics are high, the I/O threads are probably struggling, as requests are taking a long time to be picked up. To increase the number of I/O threads, increase ‘num.io.threads’ on the broker.
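The checks described above can be sketched as a small function that takes metric readings and suggests which broker setting to look at. The metric and setting names come from the text, but the suggest_thread_changes function and every threshold in it are made-up assumptions; sensible thresholds depend on your own cluster's baseline.

```python
def suggest_thread_changes(
    network_idle: float,
    request_queue_size: float,
    request_queue_time_ms: float,
    idle_floor: float = 0.3,             # hypothetical threshold
    queue_size_ceiling: float = 100,     # hypothetical threshold
    queue_time_ceiling_ms: float = 50,   # hypothetical threshold
) -> list:
    """Return the broker settings worth increasing for the given readings.

    network_idle is NetworkProcessorAvgIdlePercent expressed as a 0 to 1 fraction.
    """
    suggestions = []
    if network_idle < idle_floor:
        suggestions.append("num.network.threads")
    if request_queue_size > queue_size_ceiling or request_queue_time_ms > queue_time_ceiling_ms:
        suggestions.append("num.io.threads")
    return suggestions


print(suggest_thread_changes(0.1, 10, 5))
print(suggest_thread_changes(0.8, 500, 5))
```

In a real setup the readings would come from JMX or whatever monitoring stack you already use rather than being passed in by hand.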

Adding Partitions

The number of partitions can have a serious impact on how quickly data can be produced to and consumed from a topic.

A topic with a single partition can only have its leader on a single broker and can only be read by one consumer per consumer group. This is already a huge problem when scaling up the topic's throughput, as all produce traffic is handled by one broker and multiple consumers from the same group cannot share the work of reading it.

Increasing the number of partitions needs to be done carefully to avoid disruption and with an awareness of how it can affect a data pipeline.

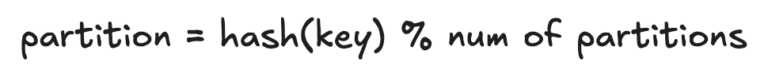

By default, partitioning hashes a record's key and finds the remainder when dividing the hash by the number of partitions the topic has. The result is the number of the partition the record will be sent to. If the number of partitions changes, records with a given key will not always go to the same partition they went to before the change.

This could be a problem if, for example, you are aggregating data in Kafka Streams, which requires records with the same key to be in the same partition.
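A quick way to see this effect is to simulate it. The sketch below uses the hash-then-remainder approach on a set of made-up keys and counts how many land on a different partition when a topic grows from 6 to 8 partitions. Note that zlib.crc32 is only a stand-in hash for illustration (Kafka's default partitioner uses murmur2), and the key names and the partition_for function are hypothetical.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Hash the key and take the remainder, as key-based partitioning does.

    zlib.crc32 is a stand-in here; it is not the hash Kafka itself uses.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


keys = [f"customer-{i}" for i in range(1000)]

# Keys whose partition changes when the topic grows from 6 to 8 partitions.
moved = [k for k in keys if partition_for(k, 6) != partition_for(k, 8)]

print(f"{len(moved)} of {len(keys)} keys changed partition")
```

The same key always maps to the same partition while the partition count is fixed; it is only the change in the divisor that moves keys around.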

The easiest way to deal with this problem is to anticipate how many consumers you will need and make sure you have at least this many partitions when you create the topic, but an unforeseen increase in demand could mean you need to add more partitions after creation.

If you have to add more partitions to an existing topic and need data with the same key to always be in the same partition, one possible solution is to create a new topic with the desired number of partitions and use a tool like ksqlDB or Kafka Streams to read the data from the current topic and produce it to the new topic. Reconsuming the data like this means all records are partitioned again, so all records with a given key end up in the same partition.

Consuming Data from a Confluent Cluster

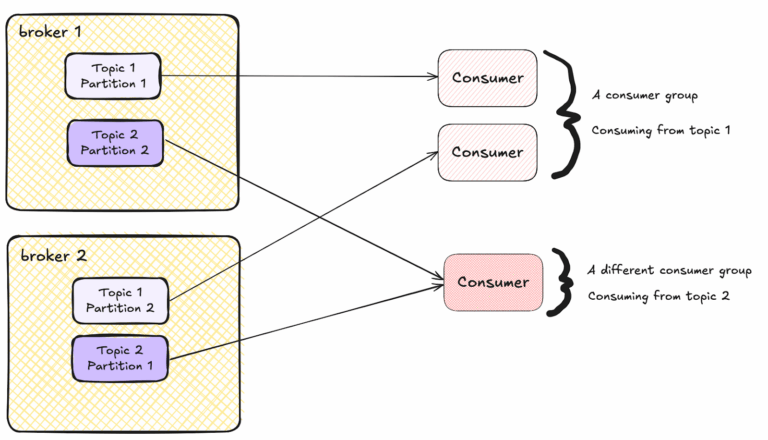

Consumers are the opposite of producers, polling a topic to get data from it.

Much like the producer, the consumer can be optimised by changing its settings and by changing broker settings. It can also suffer from an overworked CPU, lack of memory or insufficient I/O to storage, but fixing these issues can be a little more complex than it is on the producer because of how partitions and consumers interact.

A consumer group (a group of consumers configured to consume from the same set of topics) subscribes to a set of topics, and the consumer group leader assigns each partition to exactly one consumer in the group. A partition cannot be shared between multiple consumers in the same group. This means that when scaling consumers you can’t always just add more: if you have more consumers than partitions, the excess consumers will be completely idle. Dealing with this is covered in the ‘Adding Partitions’ section.

If you need to scale your data output from Confluent, one of the first things to check is the load on your consumers, monitoring their CPU, memory and any storage. If these are at capacity, then more consumers may need to be added to share the load, the hardware upgraded or the consumer code and settings optimised.
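The idle-consumer effect is easy to demonstrate with a toy assignment. The sketch below hands out partitions to consumers round-robin, which is a simplification of what Kafka's real assignors do; the assign_partitions function and the consumer names are made up for the example.

```python
def assign_partitions(partitions: list, consumers: list) -> dict:
    """Give each partition to exactly one consumer, round-robin."""
    assignment = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(partitions):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


# A topic with 4 partitions read by a group of 6 consumers.
assignment = assign_partitions([0, 1, 2, 3], ["c1", "c2", "c3", "c4", "c5", "c6"])
idle = [consumer for consumer, owned in assignment.items() if not owned]

print(assignment)
print(idle)  # ['c5', 'c6']
```

With four partitions, only four consumers ever receive work, no matter how many more join the group.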

Consumer Settings

Much like producers, consumers have many settings that can increase throughput, but these settings also come with tradeoffs that can affect latency or network utilisation.

The settings ‘fetch.min.bytes’ and ‘fetch.max.bytes’ control the minimum and maximum amount of data the broker returns for a single fetch request, and ‘max.poll.records’ caps the number of records returned by a single call to poll() (there is no corresponding minimum setting for records). Increasing ‘fetch.min.bytes’ makes the broker wait until more data has built up before responding, for up to ‘fetch.max.wait.ms’, which can increase throughput but also increases latency.
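As an illustration, here is what a throughput-leaning consumer configuration might look like, written as a plain Python dict using the dotted property names from the consumer configuration reference. The values are arbitrary examples rather than recommendations, and the check_fetch_settings helper is a hypothetical sanity check added for this sketch.

```python
# Illustrative consumer settings that favour throughput over latency.
throughput_consumer = {
    "fetch.min.bytes": 65_536,        # wait for at least 64 KiB per fetch...
    "fetch.max.wait.ms": 500,         # ...but never longer than 500 ms
    "fetch.max.bytes": 52_428_800,    # upper bound on data returned per fetch
    "max.poll.records": 1000,         # cap on records handed back by one poll()
}


def check_fetch_settings(config: dict) -> list:
    """Hypothetical helper: return a list of problems found in the settings."""
    problems = []
    if config["fetch.min.bytes"] > config["fetch.max.bytes"]:
        problems.append("fetch.min.bytes is larger than fetch.max.bytes")
    if config["max.poll.records"] < 1:
        problems.append("max.poll.records must be at least 1")
    return problems


print(check_fetch_settings(throughput_consumer))  # []
```

Raising ‘fetch.min.bytes’ is the setting here that trades latency for throughput most directly, so it is usually the first one to experiment with.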

More settings for the consumer can be found here:

https://docs.confluent.io/platform/current/installation/configuration/consumer-configs.html