How to Build a Data Pipeline with Confluent

Author: Sam Ward

Release Date: 29/09/2025

Defining a Data Pipeline

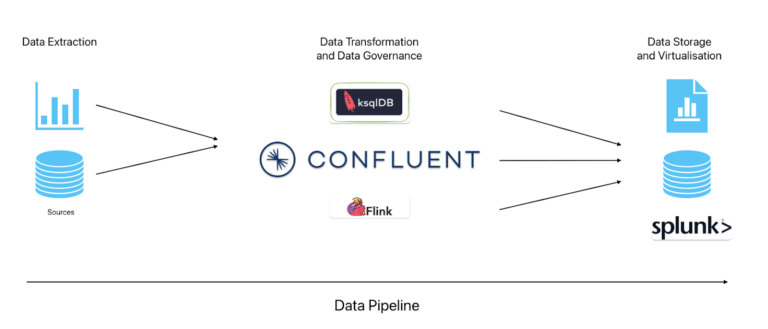

To get started with building data infrastructure, we first have to be familiar with the basic elements and concepts of the data pipeline. At its core, a data pipeline links each stage of data processing into one streamlined flow from data ingress to data egress, with any form of data transformation in between.

There are three main stages in the typical understanding of a data pipeline: Data Extraction, Data Transformation (alongside which we consider Data Governance) and Data Storage (or Virtualisation). Let’s go through these one by one, so we can see some examples and how they interact with Confluent, our go-to data streaming platform.

Stages of a Data Pipeline

Data Extraction: This is the phase where we collect data from various sources, ranging from tables in a database to IoT devices. In a typical data pipeline, these sources can either be streams of data (such as readings collected live every second from an IoT device) or batches of data (such as a static table). With Confluent, we use continuous streams of data, so we’ll focus on this type of data pipeline in this blog post.

Data Transformation: This is where we process the data and perform a wide range of tasks on it. These include extracting data from a stream and processing it into a specified format, enriching a stream of data with additional metadata, and filtering out specific events based on the values detected. Flink and ksqlDB are examples of stream processing frameworks/engines that give developers fine control over the data their system processes. This stage does not necessarily involve full data transformation. For example, a specific pipeline could simply reformat data ready for storage/virtualisation.
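To make the three tasks above concrete, here is a minimal sketch in plain Python rather than Flink or ksqlDB. The event fields (`device_id`, `temp_c`), the site lookup table and the 40-degree threshold are all made up for illustration; a real pipeline would express the same steps as a stream processing query.

```python
import json
from typing import Iterable, Iterator


def parse(raw_events: Iterable[str]) -> Iterator[dict]:
    """Extract: decode each raw JSON string, skipping malformed records."""
    for raw in raw_events:
        try:
            yield json.loads(raw)
        except json.JSONDecodeError:
            continue  # a real pipeline might route these to a dead-letter topic


def enrich(events: Iterable[dict], site_by_device: dict) -> Iterator[dict]:
    """Enrich: attach site metadata looked up by device id."""
    for event in events:
        yield {**event, "site": site_by_device.get(event["device_id"], "unknown")}


def only_hot(events: Iterable[dict], threshold_c: float) -> Iterator[dict]:
    """Filter: keep only readings above the temperature threshold."""
    return (e for e in events if e["temp_c"] > threshold_c)


# Hypothetical input: two valid IoT readings and one malformed record.
raw_stream = [
    '{"device_id": "d1", "temp_c": 21.5}',
    "not json",
    '{"device_id": "d2", "temp_c": 48.0}',
]
sites = {"d1": "warehouse-a", "d2": "warehouse-b"}

alerts = list(only_hot(enrich(parse(raw_stream), sites), threshold_c=40.0))
print(alerts)
```

Each step is a generator, so events flow through one at a time instead of being collected into a batch first, which loosely mirrors how a streaming engine processes records.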

In this stage we also consider Data Governance, but with Confluent this is built in through the use of topics, stream lineage, schema validation and schema linking. For more information on what Data Governance is within Confluent, see the following article written by Confluent themselves.
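As a rough illustration of what schema validation means, the sketch below checks an event against a hand-written schema in plain Python. This is not how Confluent implements it; the schema format, the `READING_SCHEMA` name and the `validate` function are assumptions made up for this example, and on the platform itself the check is handled for you against registered schemas.

```python
# Hypothetical schema: field name -> required Python type.
READING_SCHEMA = {"device_id": str, "temp_c": float}


def validate(event: dict, schema: dict) -> list:
    """Return a list of violations; an empty list means the event conforms."""
    errors = []
    for field, expected in schema.items():
        if field not in event:
            errors.append(f"missing field: {field}")
        elif not isinstance(event[field], expected):
            actual = type(event[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    return errors


print(validate({"device_id": "d1", "temp_c": 21.5}, READING_SCHEMA))
print(validate({"device_id": "d1", "temp_c": "hot"}, READING_SCHEMA))
```

The point of validating at this stage is that a malformed event is rejected before it reaches downstream consumers, rather than being discovered later in storage.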

Data Storage: Once our data has been processed and we have defined our data types and conditions, we can store our data in a system such as Amazon S3, MongoDB or any JDBC-compatible database with Confluent’s sink connectors. This is often for further analysis of the data, but it can also be output simply for use by endpoint applications.
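Conceptually, a database sink takes batches of processed events and writes them to a table. The sketch below imitates that with Python's built-in `sqlite3` module standing in for a JDBC-compatible database; the `readings` table, its columns and the `write_batch` helper are hypothetical, and a real sink connector is configured rather than hand-coded like this.

```python
import sqlite3


def write_batch(conn: sqlite3.Connection, events: list) -> None:
    """Write a batch of events, replacing rows that share the same primary key."""
    conn.executemany(
        "INSERT OR REPLACE INTO readings (device_id, ts, temp_c) "
        "VALUES (:device_id, :ts, :temp_c)",
        events,
    )
    conn.commit()


# In-memory database so the example leaves nothing behind.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE readings ("
    "device_id TEXT, ts INTEGER, temp_c REAL, PRIMARY KEY (device_id, ts))"
)

batch = [
    {"device_id": "d1", "ts": 1, "temp_c": 21.5},
    {"device_id": "d2", "ts": 1, "temp_c": 48.0},
]
write_batch(conn, batch)
write_batch(conn, batch)  # replaying the same batch must not duplicate rows

row_count = conn.execute("SELECT COUNT(*) FROM readings").fetchone()[0]
print(row_count)
```

Keying the table on `(device_id, ts)` makes the write idempotent, which matters because streaming systems may redeliver events after a failure.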

Building a Pipeline with Confluent

When building a pipeline with Confluent, we have to consider a few things: where the data is coming from (to ascertain what type of source connector we’ll need to spin up), how complex the transformations we’ll perform on the data will be, and where the transformed data will end up (to ascertain the sink connector we’ll need), be it an analytical platform, a data warehouse or a dashboard. The steps are as follows:

1) Sign up for Confluent Cloud, which is completely free for basic clusters, allowing you to experiment before you fully commit to the platform

2) Launch a cluster with the cloud provider of your choice (AWS, Google Cloud, Microsoft Azure)

3) Set up a client and configure either a datagen (automatic test data generation through a prebuilt connector) or your own data source

4) Set up your transformations via either a ksqlDB cluster or Flink (through a compute pool) - this can be as simple or complex as you like, but for simpler transformations ksqlDB would be the ideal choice

5) Set up a Sink Connector for your storage/analytical target location (be it Splunk, a JDBC database, S3, endpoint application, etc)
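As a rough sketch of step 3, the snippet below builds the settings a Kafka client typically needs to reach a cloud cluster and generates test events in the spirit of a datagen connector. The function names, the `orders` topic and the event fields are assumptions for illustration. `DryRunProducer` is a hypothetical in-memory stand-in so the example runs without a cluster; with the `confluent-kafka` Python package installed, its `Producer` offers the same `produce()` and `flush()` calls used here.

```python
import json
import random


def build_client_config(bootstrap_servers: str, api_key: str, api_secret: str) -> dict:
    """Kafka client settings for a SASL_SSL cluster secured with an API key/secret."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        "sasl.username": api_key,
        "sasl.password": api_secret,
    }


def generate_orders(count: int, seed: int = 0):
    """Stand-in for a datagen connector: yields reproducible fake order events."""
    rng = random.Random(seed)
    for order_id in range(count):
        yield {"order_id": order_id, "amount": round(rng.uniform(5, 500), 2)}


def produce_all(producer, topic: str, events) -> int:
    """Send each event as JSON keyed by order id, then wait for delivery."""
    sent = 0
    for event in events:
        producer.produce(topic, key=str(event["order_id"]), value=json.dumps(event))
        sent += 1
    producer.flush()
    return sent


class DryRunProducer:
    """Hypothetical stand-in that records messages instead of sending them."""

    def __init__(self):
        self.messages = []

    def produce(self, topic, key=None, value=None):
        self.messages.append((topic, key, value))

    def flush(self):
        return 0


dry_run = DryRunProducer()
sent = produce_all(dry_run, "orders", generate_orders(3))
print(sent, dry_run.messages[0][1])

# Against a real cluster (placeholders are yours to fill in):
#   from confluent_kafka import Producer
#   producer = Producer(build_client_config("<bootstrap-server>", "<api-key>", "<api-secret>"))
#   produce_all(producer, "orders", generate_orders(100))
```

Passing the producer in as an argument keeps the sending logic testable locally before any credentials are involved.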

Use Cases: Data Pipelines with Confluent

Financial Services and Fraud Detection: Confluent can be used to build a data pipeline around streams of financial transactions, where we use ksqlDB/Flink to analyse incoming transactional data to detect possible cases of fraud, and perform a series of preventative measures to ensure the account is protected from further harm (such as temporarily blocking any new transactions and alerting the account/card holder).

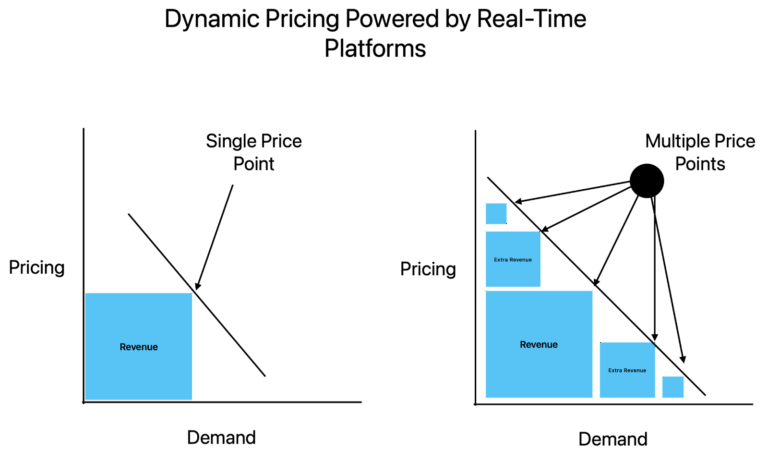
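One simple way to picture the fraud-detection logic is a velocity rule: flag an account that makes too many transactions in a short window. The sketch below is plain Python with made-up thresholds and account ids, and it assumes timestamps arrive in order; in a real pipeline this kind of rule would be expressed as a windowed query in ksqlDB or Flink, and production fraud models are considerably more involved.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Flags an account making more than max_txns transactions within window_s seconds."""

    def __init__(self, max_txns: int, window_s: int):
        self.max_txns = max_txns
        self.window_s = window_s
        self._recent = defaultdict(deque)  # account id -> timestamps inside the window

    def is_suspicious(self, account: str, ts: int) -> bool:
        window = self._recent[account]
        window.append(ts)
        # Evict timestamps that have fallen out of the sliding window.
        while window and window[0] <= ts - self.window_s:
            window.popleft()
        return len(window) > self.max_txns


rule = VelocityRule(max_txns=3, window_s=60)

# Hypothetical (account, timestamp in seconds) stream, ordered by time.
transactions = [
    ("acc-1", 0), ("acc-1", 10), ("acc-2", 15),
    ("acc-1", 20), ("acc-1", 30), ("acc-1", 200),
]
blocked = [(acc, ts) for acc, ts in transactions if rule.is_suspicious(acc, ts)]
print(blocked)
```

Here the fourth `acc-1` transaction inside 60 seconds trips the rule, while the one at 200 seconds does not because the earlier activity has aged out of the window.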

Real-time Analytics (eCommerce): Confluent can be used to build a data pipeline around streams of live data, such as eCommerce transactions, in order to better understand the needs of a company’s customers and extract the maximum possible business value from its website. An example of this would be Dynamic Pricing, where live statistics like the number of people in a queue for a ticket website affect the price of the ticket, driving profit for artists and promoters up based on demand.
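To illustrate the Dynamic Pricing idea, here is a deliberately simple pricing function. The linear formula, the 50% cap and the parameter names are all assumptions invented for this sketch, not a description of how any ticketing site actually prices.

```python
def dynamic_price(base_price: float, queue_length: int, capacity: int,
                  max_uplift: float = 0.5) -> float:
    """Scale the price linearly with demand, capped at base_price * (1 + max_uplift)."""
    demand_ratio = min(queue_length / capacity, 1.0)
    return round(base_price * (1 + max_uplift * demand_ratio), 2)


print(dynamic_price(50.0, queue_length=0, capacity=1000))
print(dynamic_price(50.0, queue_length=500, capacity=1000))
print(dynamic_price(50.0, queue_length=5000, capacity=1000))
```

In a streaming pipeline, `queue_length` would be a continuously updated aggregate, so the price responds to demand as it changes rather than on a schedule.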

Real-time Analytics (Gambling): Confluent can utilise real-time data, such as live statistics from football matches, to influence live odds in gambling apps.

Standard Database Pipelines: Confluent can also be used to simply reformat data and output it to a new system with few transformations in between, in order to keep your data flow linked in one universal platform. Confluent not only allows its users to process real-time streams of data with its cutting-edge service, but also supports a more standard batch processing approach.

In Practice: What Does a Pipeline Look Like?

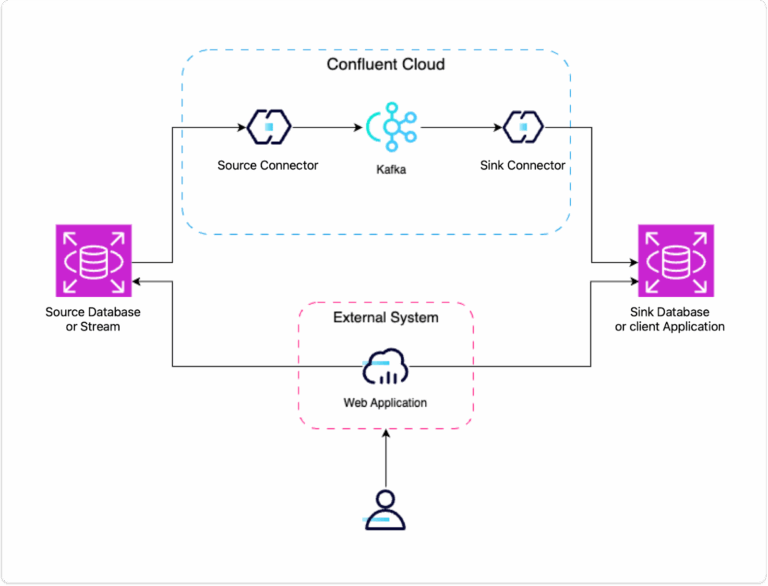

Let’s dive into what one of these pipelines looks like in practice, and which components we’ll need to set up a very basic data pipeline:

1) Source Data (in the example, this will be a PostgreSQL database)

2) Source Connector (or tool to get the data into your Data Streaming Platform of choice)

3) Data Platform of choice (Confluent Cloud will be ours)

4) Sink Connector (or tool to get the data out of your Data Streaming Platform of choice)

5) Sink or Client Application (in the example, this will be a PostgreSQL database)

6) (Optional) - We will use an External System in the form of a web application to interact with the databases

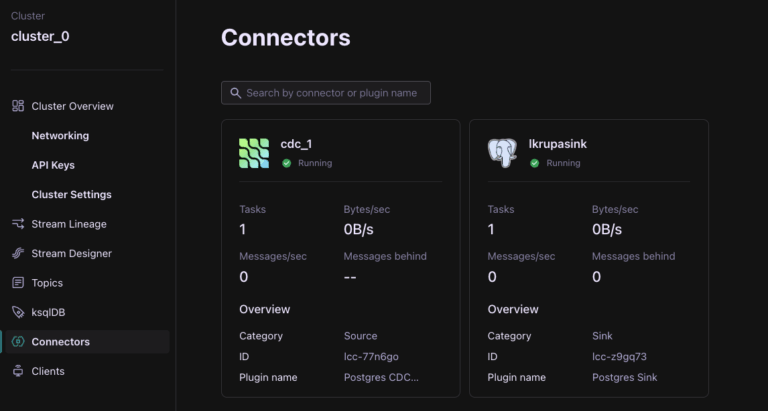

As you can see from the image above, we’ve got two connectors running in Confluent Cloud: one source (PostgreSQL CDC Source Connector) and one sink (PostgreSQL Sink Connector). To see how to set these up and get some hands-on experience yourself, you can join one of our Confluent 101 Workshops, hosted by me!
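To give a feel for what flows between the two connectors, the sketch below applies a series of change events to an in-memory copy of a table, much as a sink would keep a target database in step with the source. The event shape (`op`, `before`, `after`) follows the Debezium convention for change data capture; treating the connector's output as having exactly this shape is an assumption, since it depends on how the connector is configured, and the `id`/`name` columns are made up.

```python
def apply_change(table: dict, change: dict, key: str = "id") -> dict:
    """Apply one Debezium-style change event to an in-memory table keyed by primary key."""
    op = change["op"]
    if op in ("c", "r", "u"):  # create, snapshot read, update: upsert the new row image
        row = change["after"]
        table[row[key]] = row
    elif op == "d":  # delete: remove the old row if it is present
        table.pop(change["before"][key], None)
    else:
        raise ValueError(f"unknown op: {op!r}")
    return table


# Hypothetical change stream captured from the source database.
changes = [
    {"op": "c", "before": None, "after": {"id": 1, "name": "Ada"}},
    {"op": "c", "before": None, "after": {"id": 2, "name": "Bob"}},
    {"op": "u", "before": {"id": 1, "name": "Ada"}, "after": {"id": 1, "name": "Ada L."}},
    {"op": "d", "before": {"id": 2, "name": "Bob"}, "after": None},
]

sink_table = {}
for change in changes:
    apply_change(sink_table, change)
print(sink_table)
```

After replaying the four events, the sink holds only the updated row for id 1, matching what the source database would contain at that point.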

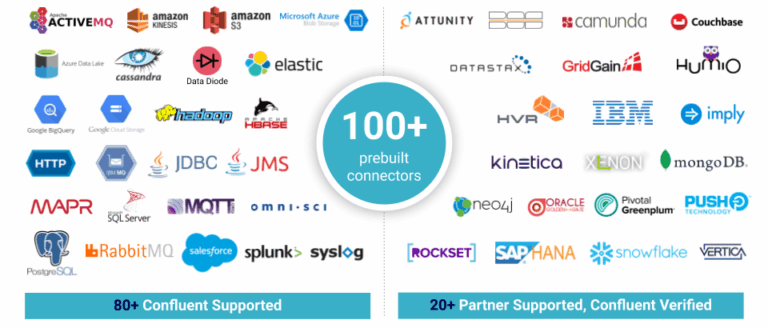

If you’re curious about what source and sink connectors are available with Confluent Cloud, see the list here.